2024 is nearing its end, and as a last communiqué from this year, I decided to talk a bit about the state of local AI as of today. Since OpenAI released ChatGPT at the end of 2022, locally running AI has flourished. However, it remains an elusive technology for many people.

There are three main reasons for this. First, the big tech companies are very good at shrouding generative AI in a veil of magic. This convinces many people that they shouldn’t even try to replicate the abilities of ChatGPT on their own computer. Second, even if they wanted to, without an M-series MacBook or a decent gaming laptop, generative AI is still slow.[1] This essentially excludes less tech-savvy or poor people. And lastly, even though we are literally drowning in open weight/open source models of quite small size, using them remains a difficult task.

This is why I would like to review the state of locally running AI and cut through the smokescreen of marketing by the various apps and tools that promise to bring generative AI to people. I also want to formulate a few wishes, if you will, for where local AI should be heading in 2025. I think that local AI and the entire ecosystem that has been burgeoning around llama.cpp is promising. But we need some direction to make it available to the common computer user, not just a small bunch of tech-savvy people like me who can handle the command line.

At a Glance

Before delving[2] into explanations, let me begin with a quick “TL;DR.” As of now, there is only one really useful way of running Large Language Models on your own computer without too much hassle (such as setting up an appropriate PyTorch environment), and that is llama.cpp. Georgi Gerganov started it as a way to quickly run the model locally, shortly after Meta’s first Llama model was accidentally (?) leaked online. Since then, it has proven to be the single most successful way to integrate generative AI locally with computers.

When it comes to you – the user – using generative AI in your applications, there are two primary ways in which any software you use can make the functionality of these models available: either via integrating llama.cpp directly, or via Ollama.

When an app integrates llama.cpp directly by building the DLL/Dylib/SO file and linking its binary to it, the app itself is able to load models and use them directly. This means that you have exactly one app that offers both whatever functionality it has and any generative AI features it includes.

When an app integrates with Ollama, on the other hand, the app itself is unable to load models, but instead requires a third-party app — Ollama — to run on your computer in the background. The app will then query that app to enable its AI functionality.

There is a third way that many applications enable but that I won’t go into today: including API access to OpenAI, Mistral, or whichever provider you want to use. That’s not local AI.

Currently, there is no “golden approach” to enabling AI functionality. Each app can essentially choose what to do. Even though both approaches are fundamentally the same, as Ollama builds on llama.cpp, this currently forces you, the user, to deal with both of them. And that can become messy. This is precisely why I am writing these words right now.

llama.cpp

First, let us take a look at llama.cpp. As already mentioned, it’s the local AI pioneer, and it has defined quite a few important concepts that we use today. First, the project devised the GGUF model file format. This file format essentially means that an entire language model can be packaged into a single file. This is very handy, as it means that everything is self-contained, which eliminates the messiness of previous approaches (SafeTensors or, god forbid, pickles with JSON metadata). Second, the team has, over time, defined a single API to access these models. Lastly, the team has been able to improve performance even on slower machines by splitting model layers between CPU and GPU, along with quite a lot of amazing code magic that I can’t get into.
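To make the “single file” idea a bit more concrete, here is a minimal sketch that reads the fixed-size header at the start of a GGUF file. It assumes the layout used by GGUF version 2 and later (a 4-byte magic `GGUF`, a 32-bit version, then 64-bit tensor and metadata counts, all little-endian). The names `GGUFHeader` and `read_gguf_header` are hypothetical and not part of llama.cpp.

```python
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(stream: BinaryIO) -> GGUFHeader:
    """Read the fixed-size GGUF header (assumes the version >= 2 layout)."""
    raw = stream.read(24)  # 4 (magic) + 4 (version) + 8 + 8 (counts)
    if len(raw) < 24:
        raise ValueError("file too short to be a GGUF file")
    # "<" = little-endian without padding: 4 bytes, uint32, uint64, uint64
    magic, version, tensor_count, kv_count = struct.unpack("<4sIQQ", raw)
    if magic != b"GGUF":
        raise ValueError("not a GGUF file")
    return GGUFHeader(version, tensor_count, kv_count)
```

An app could call this on a file opened in binary mode, e.g. `read_gguf_header(open(path, "rb"))`, to check that a download is a GGUF file at all before handing it to llama.cpp. The metadata entries and tensors that follow the header are not parsed here.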

llama.cpp is very convenient. By now, bindings exist for most programming languages, allowing app developers to make this functionality available to their users (almost) regardless of which language they use. However, it is still somewhat difficult to do so. While the C/C++ API is relatively stable, there will always be issues with integrating it, especially if you want to bake llama.cpp into an app written in a different programming language. But once you do, your app is only a few kilobytes larger, yet it offers tons of exciting functionality that, frankly, surpasses Apple’s Writing Tools by a wide margin.

However, this route is not often taken, because integrating with llama.cpp this tightly comes at a cost. App developers who do so take on a lot of additional work:

- They must offer a way for users to download models, and guide them through the forest of openly available models, lest users download something that can maybe cook coffee, but not write good prose.

- They must have the app itself manage the models, and offer an easy graphical interface for users to do so.

And the issues don’t stop there. If you think about what happens if more and more apps directly integrate with llama.cpp, a few concerns appear:

- When I have two apps that offer AI functionality open at the same time, each has to load its own model into memory. Since most models only just fit into the system memory of a typical computer, one of the apps, or, worse, both, will be horribly slow, and this may even crash your computer.

- Neither app knows of the existence of the other app, so each app must additionally implement a way to specify the folder into which models are downloaded. Otherwise, you’ll have several copies of the same model on your computer. With file sizes in the gigabytes, this quickly becomes a storage problem.
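A back-of-the-envelope calculation illustrates the memory concern. The sketch below assumes that a model’s weights take roughly `parameters × bits per weight / 8` bytes and ignores context buffers and any other overhead. The 8-billion-parameter model, the 4.5 bits per weight, and the 8 GB machine are illustrative assumptions, not measurements.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the model weights in gigabytes (10^9 bytes), ignoring overhead."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_in_memory(model_sizes_gb: list[float], system_memory_gb: float) -> bool:
    """True if all models loaded at the same time stay within system memory."""
    return sum(model_sizes_gb) <= system_memory_gb


# Hypothetical 8B-parameter model, quantized to about 4.5 bits per weight.
one_model = weights_size_gb(8e9, 4.5)
print(f"one copy:   {one_model:.1f} GB")
print(f"two copies: {2 * one_model:.1f} GB")
print("one app fits in 8 GB: ", fits_in_memory([one_model], 8.0))
print("two apps fit in 8 GB:", fits_in_memory([one_model, one_model], 8.0))
```

Under these assumptions a single copy fits, while two apps each loading their own copy do not, and that is before the operating system and the apps themselves claim their share of memory.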

As of today, many developers (including me with my LocalChat experiment in January of this year) remain blissfully unaware of these caveats, and just store models wherever they see fit. To be fair, we are still not at a stage where every app has AI integration, and most users will use one chat app and copy text back and forth. But one benefit that Apple’s Writing Tools have is that they work across apps, and don’t require you to leave the app to, e.g., rewrite a paragraph.

This leads me to the second approach.

Ollama

Ollama was started a bit after llama.cpp and it is essentially a wrapper around it that exposes its functionality using a convenient server API. There are several benefits to this approach for both app developers and users:

- App developers don’t have to deal with low-level bindings and memory management. Instead, they can call a relatively simple server API to expose generative AI functionality to their users.

- Users can just install one additional app and, in a perfect world, have all their apps use that one to provide LLM functionality.

- Each model will be downloaded only once, using only as much storage as absolutely necessary.

- There will never be issues with two apps trying to allocate the entire system memory for a simple task. The switching back-and-forth between models is entirely managed by Ollama.
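To give a sense of how little code the server approach requires from an app, here is a minimal sketch of a client for Ollama’s `/api/generate` endpoint that uses only the Python standard library. It assumes Ollama is listening on its default address `http://localhost:11434` and that the requested model has already been downloaded. The helper names are hypothetical.

```python
import json
import urllib.request

# Ollama's default address; adjust if the server is configured differently.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request and return the generated text; needs a running Ollama server."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `generate(...)` with the name of an installed model and a prompt returns the model’s answer as a string. Loading the model, keeping it in memory, and swapping it out again is left entirely to Ollama.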

However, as you might’ve guessed, it’s not as simple as that. While in theory, this approach is definitely the better one, there are still some pitfalls.

First, Ollama does not offer any graphical user interface of its own. This means that, before one can even use a model, the user needs to use the command line to download one. While this is a one-off task, this is a hard no for many non-tech-savvy users who still may want software to respect their privacy. I think that this is a grave oversight.

And second, Ollama only has a reasonable size on macOS. For reasons unknown to me, the installers for Windows and Linux are more than one gigabyte in size.[3] They should not be this large, and I hope there will be optimizations.

Excursus: Apple Writing Tools

So where does this leave us? Before sharing my wishes for local AI development in 2025, let me return to Apple. While most readers of this blog do not use Macs themselves, I have had the pleasure of experimenting with Apple’s new “Writing Tools” over the past weeks, and I believe there are lessons to be learned.

Apple has always been a hardware company that happens to make software because they have to. However, especially since the switch to the insanely efficient M-series chips in 2020, Apple has had some form of second awakening. While macOS is clearly still a far cry from the Jobs era, if Apple did one thing right in 2024, it was the user interface for their writing tools.

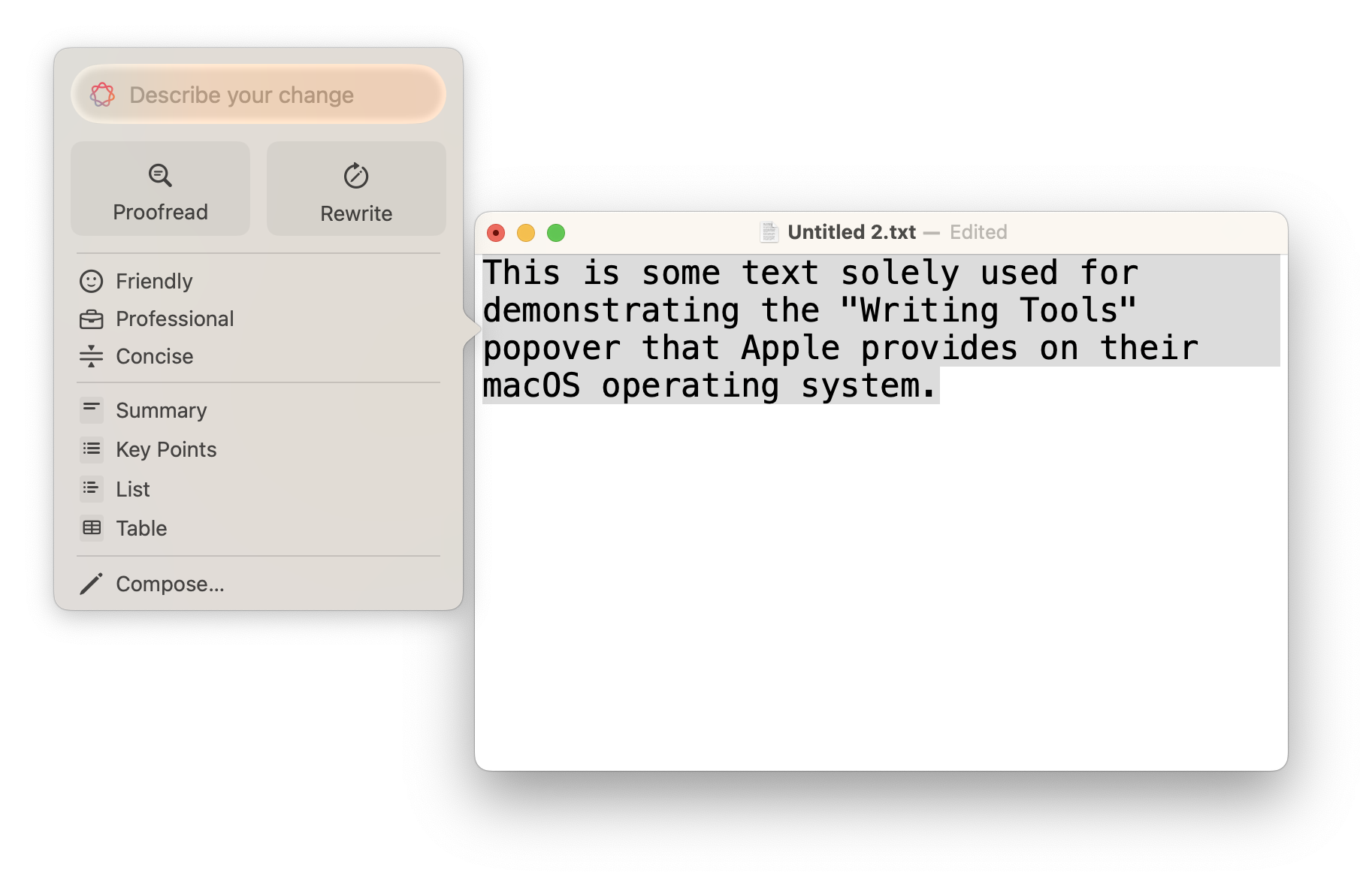

Here’s how this works: You select some text, and a small icon appears next to it. If you click on it, a small popover appears that allows you to perform various operations on the text – from proofreading to reformulating or otherwise changing it. Some built-in apps such as Mail already use this under the hood to, e.g., summarize email chains.

While this is not yet fully convincing, and Apple will hopefully continue to improve upon the experience, I believe that the general approach is where local AI should be headed, too. Apple’s design consciousness clearly shines in this regard, because this is quite literally one of the most useful use-cases for generative AI at the moment, besides simply “chatting” with some LLM. And I believe that Apple has nailed how it should be done.

In fact, I have already tested an app that approximates this: Enchanted. It’s macOS only, but it offers a very similar experience. The app suffers from a set of limitations at the moment, but I believe this is the proof of concept we need.

With that being said: over the past year I have identified a few avenues I think local AI developers should take in 2025 to make the whole space friendlier to more users, so that we can turn OpenAI’s big plan of “Artificial General Intelligence is when we earn $100 billion” sour.

What I Wish for Local AI in 2025

The biggest hurdle to wide adoption of local AI that software developers can control is that AI tools still feel unfinished.

llama.cpp is very powerful, but properly supported only by a handful of apps, most notably GPT4All. Further integration is hampered by the fact that it’s still very difficult to integrate the library into existing apps. Bugs are still frequent. This also extends to the apps that integrate llama.cpp. For example, GPT4All still can’t properly do updates and doesn’t use many of the features of the Qt framework on which Nomic AI has built it. This makes the app ugly. Don’t get me wrong, the core functionality works flawlessly, and RAG with local vector databases works amazingly well. But it is clearly lacking on the GUI side of things.

While Ollama’s setup process is almost comically simple compared to llama.cpp’s – download an app, launch it, and start using models – there are some issues I think its developers should tackle. A small tool such as Ollama is better than integrating llama.cpp directly for two reasons. First, it provides a better separation of concerns: Users likely only need one general model, and one coding model (if at all). Having one app exclusively deal with this means that, if users want to try out other models, they know exactly where to go. Frankly, model choice is not something the app should be concerned about besides asking the user which of the available ones to use. Second, app developers can integrate Ollama’s API into their apps much more easily to directly provide the functionality they need.

To make this happen, however, the Ollama devs need to solve three issues, none of which appear insurmountable.

First, Ollama needs a graphical user interface. Right now, it is only a small icon that lives in your menu bar/task bar to indicate that the server is running. When clicking it, users should be able to summon a small preferences window that allows them to do two things:

- Download, remove, update, and generally manage models and defaults.

- Browse the available models across the various marketplaces and get some help as to which models are good for what. (I think GPT4All does a good job at this.)

Adding such a window is quite an easy task, and it would also provide a place for additional information (such as the version of Ollama).
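The data such a window needs is already exposed by the server. As a rough sketch, the snippet below turns the JSON returned by Ollama’s `GET /api/tags` endpoint (the list of locally installed models) into rows that a preferences window could display. The sample response is made up for illustration and only contains the `name` and `size` fields; the helper names are hypothetical.

```python
import json
import urllib.request


def fetch_installed_models(base_url: str = "http://localhost:11434") -> dict:
    """Ask a running Ollama server for its locally installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return json.loads(resp.read())


def model_rows(tags_response: dict) -> list[tuple[str, str]]:
    """Turn an /api/tags response into (name, readable size) rows, largest first."""
    models = sorted(
        tags_response.get("models", []), key=lambda m: m["size"], reverse=True
    )
    return [(m["name"], f"{m['size'] / 1e9:.1f} GB") for m in models]


# Made-up sample in the shape of an /api/tags response (sizes are in bytes).
sample = {
    "models": [
        {"name": "example-small:latest", "size": 2_000_000_000},
        {"name": "example-large:latest", "size": 4_700_000_000},
    ]
}
for name, size in model_rows(sample):
    print(f"{name:<24} {size}")
```

With a server running, `model_rows(fetch_installed_models())` would produce the same kind of rows from the real list, which a GUI could then render as a table with buttons for removing or updating each model.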

Second, Ollama needs to figure out why only the Mac app is reasonably sized, while both the Windows and Linux installers are huge chonkers, bigger than some of the models it supports. This is hard for users to swallow, but it is not insurmountable either.

Lastly, Ollama needs wider adoption. The API is well described and looks similar to the OpenAI API. App developers should continue to add Ollama integration so that users don’t just install Ollama for a single app, but can actually reap its benefits across the many apps they use.
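The similarity to the OpenAI API can be illustrated with a small sketch. Ollama also serves an OpenAI-compatible endpoint under `/v1/chat/completions`, so a request body in the OpenAI chat format can be sent to the local server instead of a cloud provider. The helper names below are hypothetical, and the sketch assumes Ollama’s default port.

```python
import json
import urllib.request


def chat_payload(model: str, user_text: str, system_text: str = "") -> dict:
    """Build a chat request body in the OpenAI-style `messages` format."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    return {"model": model, "messages": messages, "stream": False}


def chat(payload: dict, base_url: str = "http://localhost:11434/v1") -> str:
    """POST the payload to an OpenAI-compatible server and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),  # passing data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]
```

For an app that already talks to an OpenAI-style API, supporting Ollama can therefore come down to making the base URL configurable (plus whatever authentication the respective server requires).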

Then, app developers would be able to stop integrating with llama.cpp directly, and instead only hook into Ollama’s API. This would mean some centralization, yes, but as we have learned with social media over the past years, some form of centralization is crucial if we want great things to happen.

This is also a wish I have for 2025: We need fewer apps that offer ChatGPT/Ollama/some other chatbot integrations, and more focus on supporting the viable options that are out there already. We need more energy focused on llama.cpp, Ollama, and fixing these issues so that a common way of integrating AI becomes possible.

Finally, I believe that developers would do well to take Apple as an example. The two primary use cases of generative AI right now are text-focused tasks and chat, so it makes sense to offer a similar but “open” approach to writing tools. I want an app that lets me use whatever model I want to perform the writing tasks and that stays out of my way, similar to Apple’s Writing Tools. The “staying out of the way” part is crucial. Why not offer a Siri-like interface to ask an actual local model rather than the still-lobotomized machine learning that Siri has?

Now, I know that talk is cheap, so I won’t be mad if not all of this happens. But I think that we’re already 80% of the way to enabling mass adoption of open weight/open source generative LLMs. As always with Pareto ratios, the final 20% needed to make even non-tech-savvy users switch is the hardest. I am looking optimistically into 2025, because I think the issues I’ve identified are quick to solve for the community, and then it’s up to me and my colleagues from other Open Source apps to actually integrate generative AI productively. I’m happy to do my part.

That’s it — that’s 2024 on this blog. Let me finish this article by wishing you a Happy New Year and all the best for your 2025. May the world take a turn for the better for once.

See you next year!

[1] That said, I would like to stress that Microsoft’s Phi models have become quite capable over the last year; I even managed to get one running on my potato server at an almost acceptable speed.

[2] Did you, for a short moment, think that I may have written this article using LLMs? If so, you have been paying attention over the past year. Very good!

[3] This becomes even funnier once you realize that the macOS app is actually just an Electron wrapper, a technology often decried as “bloated” and just “bad”.