Choo! Choo! The hype train is back in town, and this time it has brought with it … ✨ ChatGPT! ✨ Twitter, Mastodon, blogs, even some of my email listservs are full of people chatting about ChatGPT. It’s basically a viral TikTok hit for people with technical interests. But the worst part of all this? Thousands of people are running a large-scale advertising campaign for a for-profit company. For free! There is literally nothing in it for any of us. ChatGPT is not a technological breakthrough; it is not special in any way, except that people can now get some hands-on experience with a GPT model that was previously hidden behind an extensively monitored API tied to hefty non-disclosure clauses. The difference between GPT-3 and GPT-3.5 (the model behind ChatGPT) is literally that you can now share screenshots of the model’s raw output. That’s it!

Soooo… it’s a fad. Yes, I’m that guy. The fun-crusher. I don’t know whether that is due to my Germanness or to the fact that I deal with these systems on a daily basis, but while ChatGPT has a certain appeal, it does not deserve the hype it receives. Let me tell you why.

But first, thank you for not closing the browser tab in a fury because you think I am an idiot/a fool/a Luddite!

So, language models – more specifically the family of large language models (LLMs) such as GPT-3 or LaMDA – have been around for quite some time now. It all started with the conception of transformer models by Google’s research division in 2017 (Vaswani et al., 2017). Ever since, language models have grown bigger and bigger, but not an inch smarter (Bender et al., 2021). Yes, they have become capable of generating comprehensible text with a much higher probability than five years ago, but they’re still not great. It is very nice that we have better and better models (I’m using them myself in my research), but I am always a tad appalled by the hype and the unreflective anthropomorphism that accompanies basically every new blog post on OpenAI’s, Google’s, or Facebook’s website.

In this article, I want to provide a set of arguments that will hopefully bring some realism back into this whole mess without completely discouraging anyone from experimenting with large language models. These are: (a) large language models have no comprehension of meaning, (b) ChatGPT is not a breakthrough, (c) OpenAI is not your friend, (d) your job will not be taken over by a machine, and (e) no, it’s not sentient.

None of these arguments are in any way new or original, but it really is necessary to repeat them over and over again, because it is hard for researchers and practitioners to fight the advertising campaigns of trillion-dollar companies. So, shall we?

Large Language Models are Still Stupid

In 2021, a very special paper was published that the field commonly refers to simply as the “Stochastic Parrots” paper. It is possibly the only paper with an emoji in its title, and it was a collaboration between some very smart women in tech, one of whom was fired from Google over that paper: Emily Bender, Timnit Gebru, Angelina McMillan-Major and Margaret Mitchell (2021). What they basically state, later joined by Meredith Whittaker (2021), is that ever since the original transformer paper was released in 2017, language models have simply grown bigger without an inch more understanding.

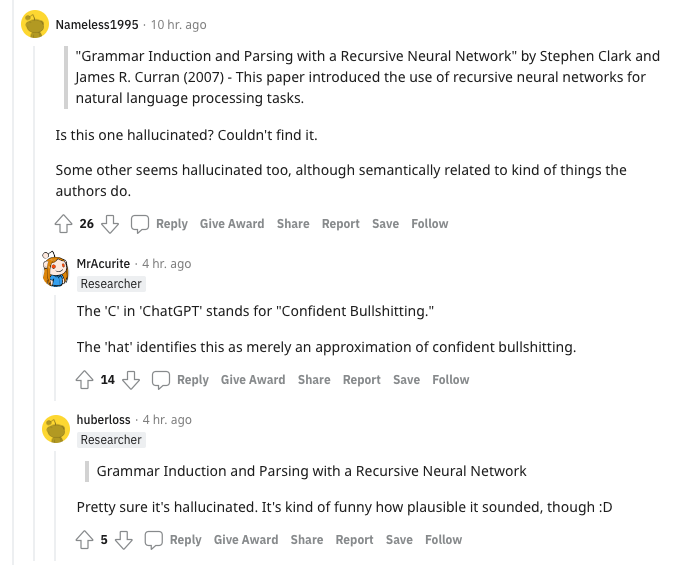

Current language models have parameter counts running into the trillions, but even in the output of ChatGPT one can clearly see that it has no idea what it is “talking” about. What it produces sounds convincing, but ultimately it is hollow. Take, for example, this insightful exchange on Reddit earlier today:

ChatGPT really has no idea what it’s talking about, but it is good at convincing you. There is no paper titled “Grammar Induction and Parsing with a Recursive Neural Network” that was published in 2007. But, and that’s the trick ChatGPT has up its sleeve, all of the terms have a high probability of occurring where they do: they form a grammatically correct construct, and individually the terms are indeed connected to AI research. In this constellation, however, they simply do not exist.
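To make the “high probability of occurring where they do” point concrete, here is a deliberately tiny sketch. It uses a bigram model, where each word only “knows” which words followed it in the training text. That is far simpler than a transformer and is swapped in purely for illustration; the two training titles are made up for this example and are not real papers. Every adjacent word pair in the model’s output occurs somewhere in its training data, yet it still produces titles that exist nowhere in that data.

```python
from collections import defaultdict

# Hypothetical toy corpus: two made-up paper titles, for illustration only.
CORPUS = [
    "grammar induction with neural networks",
    "parsing with a recursive neural network",
]


def bigram_successors(titles):
    """Map each word to the set of words that directly follow it in the corpus."""
    successors = defaultdict(set)
    for title in titles:
        words = ["<s>"] + title.split() + ["</s>"]  # start/end-of-title markers
        for current, following in zip(words, words[1:]):
            successors[current].add(following)
    return successors


def all_generations(successors, max_len=12):
    """Enumerate every title the bigram model can produce (up to max_len words)."""
    results = []

    def walk(word, path):
        if len(path) > max_len:
            return
        for nxt in sorted(successors[word]):
            if nxt == "</s>":
                results.append(" ".join(path))
            else:
                walk(nxt, path + [nxt])

    walk("<s>", [])
    return results


successors = bigram_successors(CORPUS)
# Titles the model can "say" that never appeared in its training data.
novel = [t for t in all_generations(successors) if t not in CORPUS]
for title in novel:
    print(title)
```

Among the six novel outputs is “grammar induction with a recursive neural network”: fluent, plausible, and built entirely from attested word pairs, but never seen as a whole.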

To borrow Noam Chomsky’s famous example sentence, ChatGPT is spitting out a stream of “Colorless green ideas sleep furiously.” This leads me to another important point, which John M. Berger made on his blog:

In other words, the first measure of success for a generative A.I. app is whether it can persuade you to accept an untrue premise.

Variations on this theme can also be found in a Wired article, but please read Emily Bender’s clarification on Twitter as well, as the article unfortunately also includes some problematic statements!

The bar that transformer-based generative language models have to clear is not actually becoming sentient, but fooling humans. And this is already happening. Many faculty members on Twitter and Mastodon are already worrying that students could cheat on their exams by having ChatGPT write their essays for them, and the first debates have broken out over whether students can now cheat without us noticing. To that, however, I would confidently answer that it’s much easier to identify generated gibberish than plagiarism.

To detect plagiarism, you may “only” need to run string-based comparisons of the essay in question against a database of published papers. But do you know how difficult it is to construct such a database? And do you know how much that infringes on students’ copyright? That’s a different discussion, however. The point I want to make is this: to identify generated text, you have two options. Either you read it and pretty quickly realize that it’s sophisticated-sounding gibberish – or you can just literally count words.
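The string-based comparison mentioned above can be sketched in a few lines. This is a minimal sketch, assuming word 3-gram “shingles” and Jaccard similarity as the comparison method; both are common textbook choices, not a description of any particular plagiarism checker, and the function names and the mini database are hypothetical.

```python
import re


def shingles(text, n=3):
    """Return the set of overlapping word n-grams ("shingles") in a text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Share of shingles two texts have in common (0.0 = none, 1.0 = identical sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_match(essay, database, n=3):
    """Return (similarity, document) for the database entry closest to the essay."""
    essay_shingles = shingles(essay, n)
    return max(
        ((jaccard(essay_shingles, shingles(doc, n)), doc) for doc in database),
        default=(0.0, None),
    )


# Hypothetical mini "database" of published texts.
published = [
    "The quick brown fox jumps over the lazy dog.",
    "Colorless green ideas sleep furiously.",
]
score, source = best_match("the quick brown fox jumps over the lazy dog", published)
print(score, source)
```

The comparison itself is cheap; as the text says, the hard part is assembling a database of published work to compare against.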

Yes, the old method used to de-anonymize the authors of the Federalist Papers can defeat technology that requires many times more compute than Mosteller and Wallace (1963) had for their original paper almost sixty years ago. This almost feels like an insult to me. Since at least 2014, if not earlier, we have known how easy it is to fool neural networks – please go and have a look at the magnificent Szegedy paper (Szegedy et al., 2014) – and yet, since the inception of transformer models, this whole discussion has somehow been forgotten, because everyone was too busy carrying the new godchild of some tech corporation across town.
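The word counting works roughly like this: function words such as “upon” occur at fairly stable, author-specific rates regardless of topic. Mosteller and Wallace (1963) used such rates within a Bayesian analysis; the sketch below swaps that for a much cruder nearest-profile comparison, and its short marker list and toy texts are assumptions for illustration only. Applying the idea to generated text would mean comparing against profiles of human and model output, a step not shown here.

```python
import re
from collections import Counter

# Illustrative marker words; "upon" and "whilst" are classic examples from the
# Federalist Papers study, but this short list is an assumption, not the
# original word set.
MARKERS = ("upon", "whilst", "while", "enough", "by", "to")


def rates_per_thousand(text, markers=MARKERS):
    """Occurrences of each marker word per 1,000 words of text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = len(words)
    return {m: (1000 * counts[m] / total if total else 0.0) for m in markers}


def profile_distance(p, q):
    """Sum of absolute differences between two marker-rate profiles."""
    return sum(abs(p[m] - q[m]) for m in p)


def closest_author(unknown_text, known_texts):
    """Attribute unknown_text to the author whose marker profile is nearest."""
    target = rates_per_thousand(unknown_text)
    return min(
        known_texts,
        key=lambda author: profile_distance(target, rates_per_thousand(known_texts[author])),
    )


# Made-up samples standing in for known writing by two authors.
known = {
    "Author A": "upon the hill upon the house upon the road",
    "Author B": "whilst the hill whilst the house whilst the road",
}
print(closest_author("upon a river and upon a lake", known))
```

Real stylometry uses far longer texts and many more marker words; with samples this short, a single word decides the outcome.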

Which leads me to another issue I have with ChatGPT: it almost appears as if ChatGPT is a breakthrough, but is it, though? (Of course it isn’t, as the heading right below this paragraph gives away, but good style requires me to pose a rhetorical question from time to time.)

ChatGPT is not a Breakthrough in Language Generation

But why isn’t it a breakthrough? To answer this question, let’s first consider the opposite: what would make it a breakthrough? A breakthrough is colloquially understood as someone managing to achieve something that many people before them couldn’t. But ChatGPT has no property that would allow us to make that statement. It uses the transformer architecture, which was invented five years ago. It uses more parameters than the models before it, something Google had done before as well. It is intended for use as a chatbot, as was LaMDA before it. It uses all the ingredients we already know. The only thing that makes it somewhat exciting is that the public suddenly has access to it and can play around with it.

And this should make you curious: why can the public suddenly just interact with it? To refresh your memory, ChatGPT’s predecessor, GPT-3, was shielded behind many hurdles one had to clear before being able to interact with it. Just last year, Tom Scott made a very instructive video that demonstrates just how cautious the folks at OpenAI were with regard to their model:

Now, OpenAI’s terms of service don’t let me give you the full list. I have to curate them, and show you a sample. Those are the terms and conditions I agreed to. (1:43–1:50)

ChatGPT is based on OpenAI’s GPT-3.5 model. In other words: an (ostensibly) better model than GPT-3, released less than two years later. What made OpenAI suddenly change its mind? Instead of requiring people who interact with the model to curate its output and never share it in full, it is now perfectly happy with thousands of people sharing raw model output with the world.

This leads me to the next argument:

OpenAI is Not Your Friend

OpenAI is a for-profit company. This means it must become profitable at some point. Also, it has no obligation to share any of its research with the public. It can, and it has done so in the past, but it does not have to. GPT-3 is still locked away, and ChatGPT can only be used from OpenAI’s website. If they want to, they can pull the plug and remove access for everyone in an instant. OpenAI can make money by selling access to its models to companies that are looking for a cheap human-replacement chatbot that is not as stupid as some hard-coded support systems. And it promotes those models by using you (or a friend of yours, or at least someone you follow on Twitter).

OpenAI profitably sells licenses for API access to GPT-3 to companies across the globe, and it will likely do the same with ChatGPT. Tom Goldstein did a rough back-of-the-napkin calculation on Twitter and estimated that OpenAI may need to pay $100,000 a day just to keep the servers that power ChatGPT running. That is a lot of money. It’s roughly four times what a Swedish PhD student makes in a year. And OpenAI needs to come up with this every day.
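Taking Goldstein’s estimate at face value, the arithmetic behind these figures is simple; the annual total and the implied salary below are derived only from the numbers in the text, not separately sourced.

$$
\$100{,}000\ \text{per day} \times 365\ \text{days} = \$36{,}500{,}000\ \text{per year},
\qquad
\frac{\$100{,}000}{4} = \$25{,}000.
$$

The second figure is the annual PhD salary implied by “roughly four times”.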

I have a very simple theory for why OpenAI has invited the larger public to tinker with its new toy: it’s free advertising.

Think about it: in order to make companies pay money for some computer system, OpenAI needs to convince some overpaid middle-management guys that its system is better than the existing call-center infrastructure many mid-sized companies maintain, either in terms of cost or in terms of quality. But middle management is notorious for being dense and stingy. So OpenAI needs to somehow convince these folks that its fancy new toy is a worthy investment. And how does it do that? By showing them how good its model is. And how can OpenAI show them the capabilities of its tool? By shoving it into said middle managers’ timelines.

By having random Twitter users share screenshot after screenshot of output generated by its system, OpenAI makes it basically impossible to avoid mentions of ChatGPT unless you live under a rock. In this way, OpenAI can lure in the people who control large budgets and land lucrative contracts that refinance the costs of running the model 24/7.

An advertising campaign can easily exceed $100,000 a day, so depending on how far the buzz about ChatGPT travels, this can actually be a very cheap campaign. OpenAI does not have to pay you for sharing evidence of the quality of its model. It does not have to pay YouTubers and other content creators to make them talk about ChatGPT. It does not have to sponsor anything. It does not have to pay for ads. Just some servers.

To be honest, I kind of appreciate the wit with which OpenAI markets its products. But enough on this topic; I think I have made my point clear. TL;DR: Do not share screenshots of ChatGPT where you state how cool that thing is. OpenAI makes money from your free labor. Which brings me to the next point.

You Will Not Be Replaced by a Machine

While faculty are worried that students could cheat on their essays, software developers are worried that they’ll be replaced by a machine in the near future. Before I elaborate: no, none of you will be replaced. Don’t worry.

Some people have successfully made ChatGPT write code. One redditor even managed to have ChatGPT invent a new programming language from scratch. In general, programming-related subreddits are currently swamped with ChatGPT-related posts. Linus Sebastian even devoted a good chunk of the WAN Show a few days ago to debating ChatGPT, including his co-host Luke spending considerable time on the model’s programming abilities.

So, will you be replaced? This discussion sounds eerily similar to the debates that arose not so long ago when GitHub introduced its own model, “GitHub Copilot”. Basically, GitHub Copilot is a kind of “ChatGPT”, but just for code: you write out in a comment what you want to achieve, and the model writes the corresponding function for you. Neat, right? Well, almost. Pretty soon, people discovered that Copilot was literally copy-pasting code from their own projects into random strangers’ programs. Currently, there is a huge class-action lawsuit against GitHub on the grounds of its atrocious software piracy. You may get away with infringing upon students’ copyright, but you do not do that with software developers. Those people bite back.

You know what the best thing about Copilot is? It is based on OpenAI’s Codex model! This is just funny at this point.

But I digress. To make my point: there are two ways in which an AI model can write code. Either it comes up with fantasy code, in which case the chances of the code actually compiling are very low, or it just pirates other people’s code. So, no. No software developer will be replaced by their own brainchildren.

But what about other people, with easier-to-replace jobs? For example call center workers? “These people surely have to fear for their jobs, right?”

Well, Google tried to replace call center workers with its “AI Assistant”. That was in 2018. Do we have “AI Assistant” today? No, we don’t. This should give you enough of a hint to judge how likely it is that call center employees will lose their jobs in the next decade.

In fact, there is even a term for this: automation anxiety (Akst, 2013). Ever since the Industrial Revolution, people have feared being replaced by machines. The first were the Luddites, the “machine smashers” who worked in the textile industry and attempted to destroy the machinery they feared would soon replace them. That was in the 19th century – more than a century ago. And have they been replaced? Just google “sweatshop Bangladesh” to get an up-to-date answer. A similar fear came up again sometime in the 1960s. David Autor wrote a very good piece on automation anxiety in 2015, asking “Why Are There Still So Many Jobs?” (Autor, 2015).

A slightly different argument can even be found in Karl Marx’s writings. Marx ridicules the Luddites, arguing that they attacked the wrong target: according to him, instead of smashing machinery, they would have been better off smashing industrialists. If you are willing to translate some dusty German: I wrote about this in 2018 and argued why, at least under capitalism, it’s pretty unlikely that any machine will replace you. You can find the full text on ResearchGate.

No AI Model is Sentient

A last point should be made here, just for the sake of completeness. If it hasn’t already become clear: no, ChatGPT is also not sentient. People tend to anthropomorphize artificial intelligence pretty quickly, so I feel that I should always add this as a reminder, not just for you, but for me as well. Because yes, there is some beauty in believing that a machine can hold a conversation with you. But it can’t, and we all, me included, need to remind ourselves of this fact from time to time.

Where does that leave us? Well, language models, and AI more generally, are here to stay. And that is perfectly fine. I honestly like the progress that computer scientists have made over the last century. My whole dissertation is based on language models. But we still have a long way to go. And even transformer models are slowly approaching their end of life; computer scientists are looking towards new horizons, such as compositional AI. But ChatGPT is not a breakthrough. And OpenAI needs to make money. So, for all the amazement ChatGPT inspires, remember: an AI is still just a computer program. A tool to help you. Nothing more. Nothing less.

References

- Akst, D. (2013). Automation Anxiety. The Wilson Quarterly (1976-), 37(3). http://www.jstor.org/stable/wilsonq.37.3.06

- Autor, D. H. (2015). Why Are There Still So Many Jobs? The History and Future of Workplace Automation. The Journal of Economic Perspectives, 29(3), 3–30. http://www.jstor.org/stable/43550118

- Bender, E. M., Gebru, T., McMillan-Major, A., and Mitchell, M. (2021). On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜. Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–623. https://doi.org/10.1145/3442188.3445922

- Mosteller, F., and Wallace, D. L. (1963). Inference in an Authorship Problem: A Comparative Study of Discrimination Methods Applied to the Authorship of the Disputed Federalist Papers. Journal of the American Statistical Association, 58(302), 275–309. https://doi.org/10.1080/01621459.1963.10500849

- Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan, D., Goodfellow, I., and Fergus, R. (2014). Intriguing properties of neural networks. arXiv:1312.6199 [cs]. http://arxiv.org/abs/1312.6199

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., and Polosukhin, I. (2017). Attention Is All You Need. arXiv:1706.03762 [cs]. http://arxiv.org/abs/1706.03762

- Whittaker, M. (2021). The steep cost of capture. Interactions, 28(6), 50–55. https://doi.org/10.1145/3488666